Create a Pd patch that incorporates:

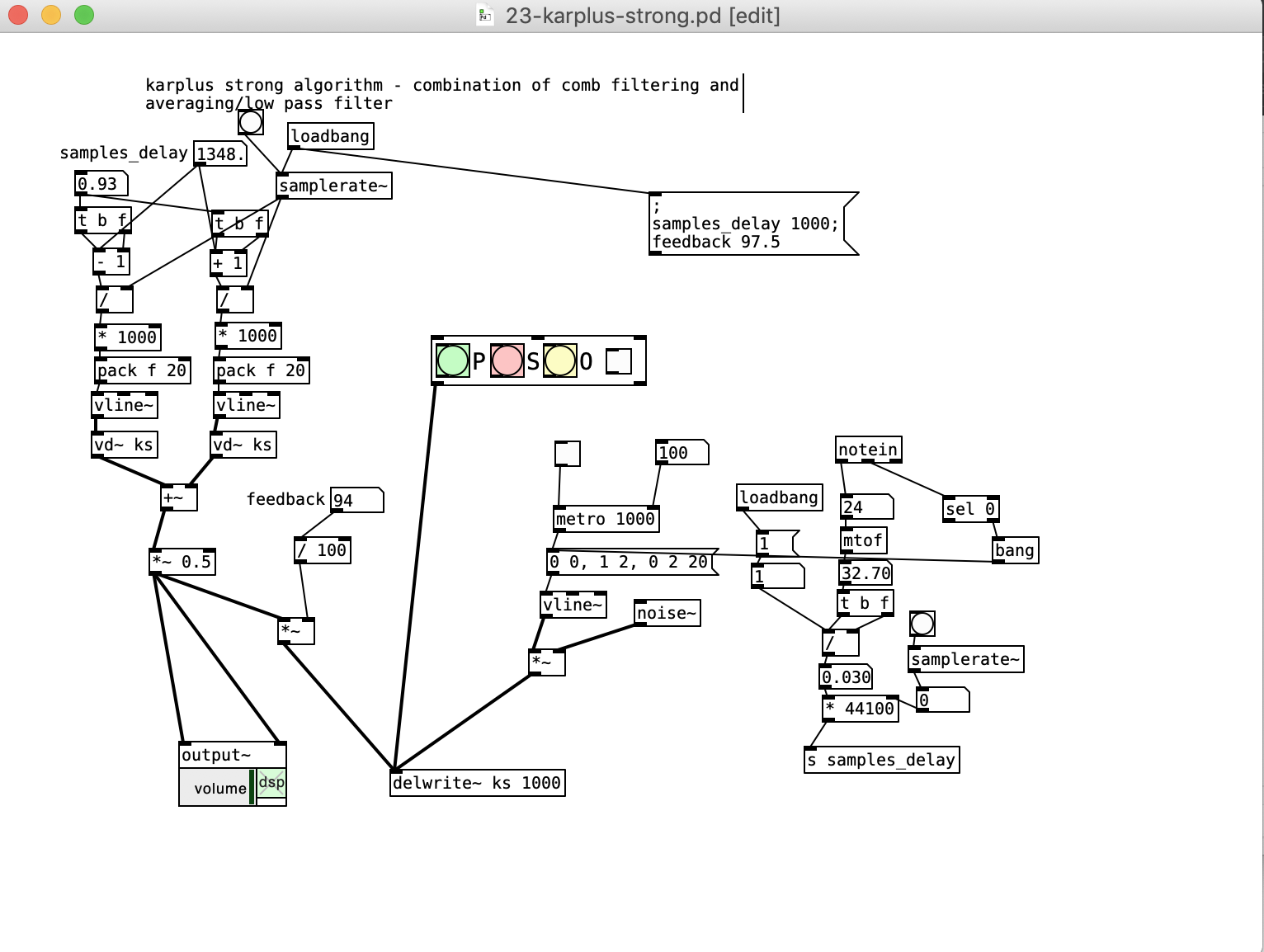

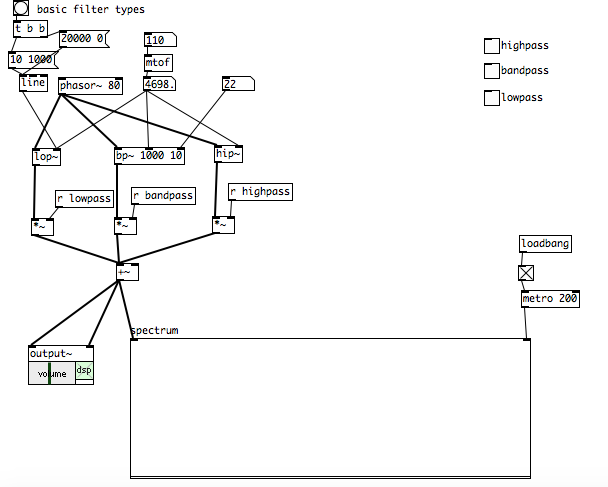

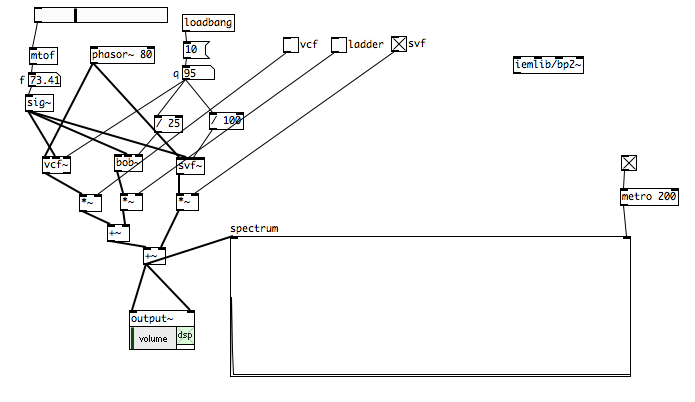

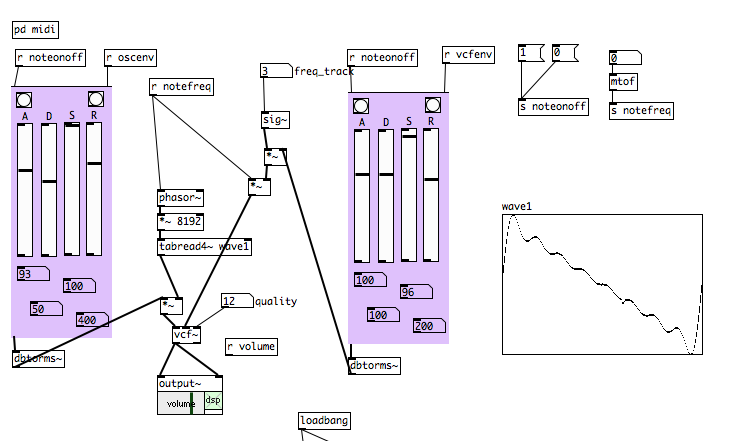

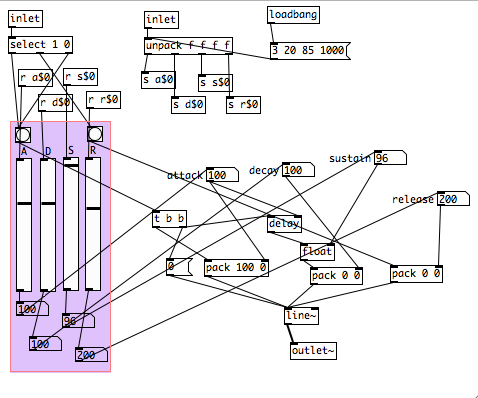

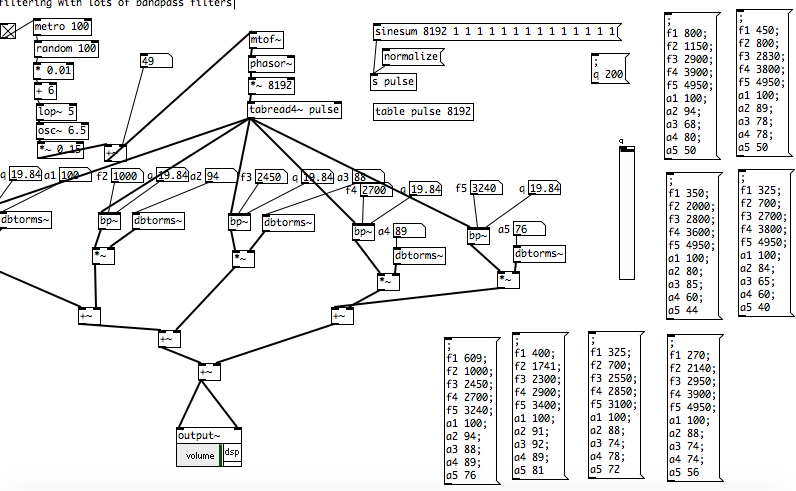

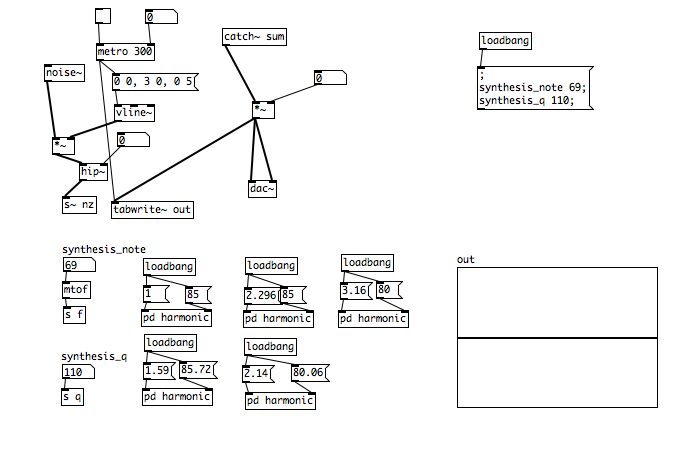

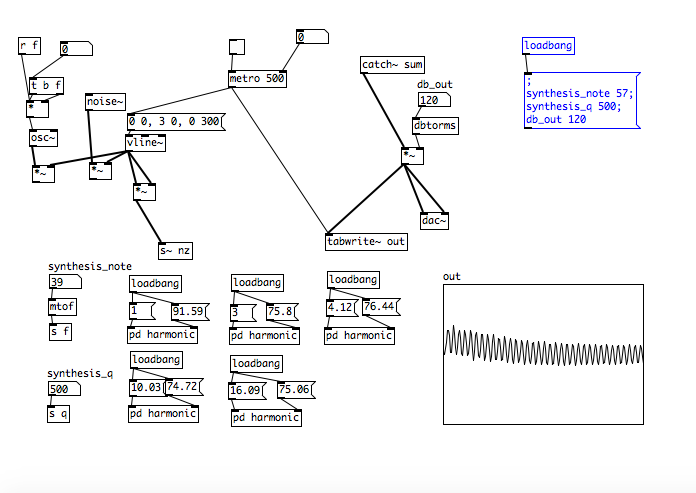

1) A polyphonic synthesizer based on multiple wavetable oscillators and two modulated filters.

2) A multisample player that plays percussive sounds as well as vocal or environmental sounds.

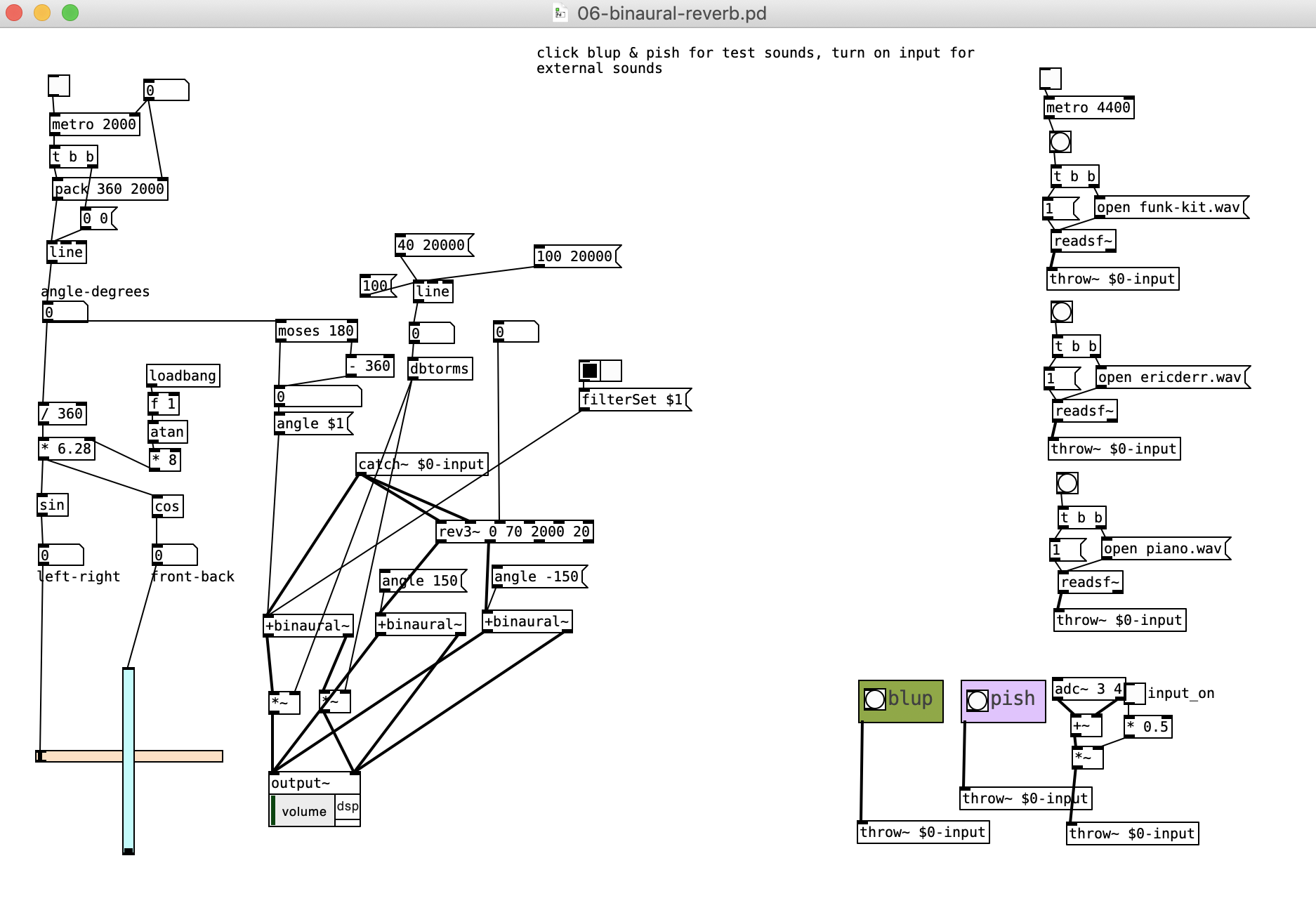

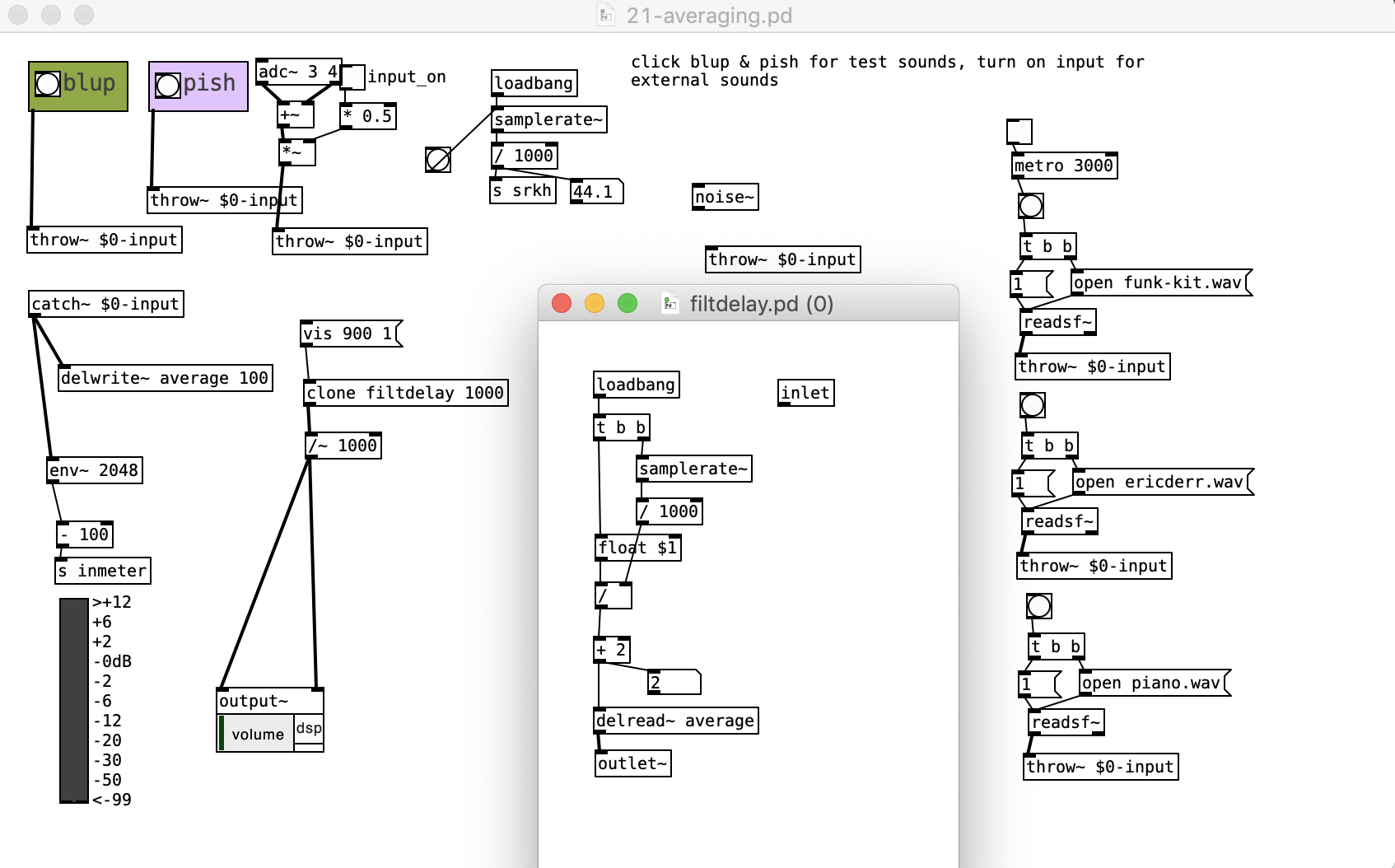

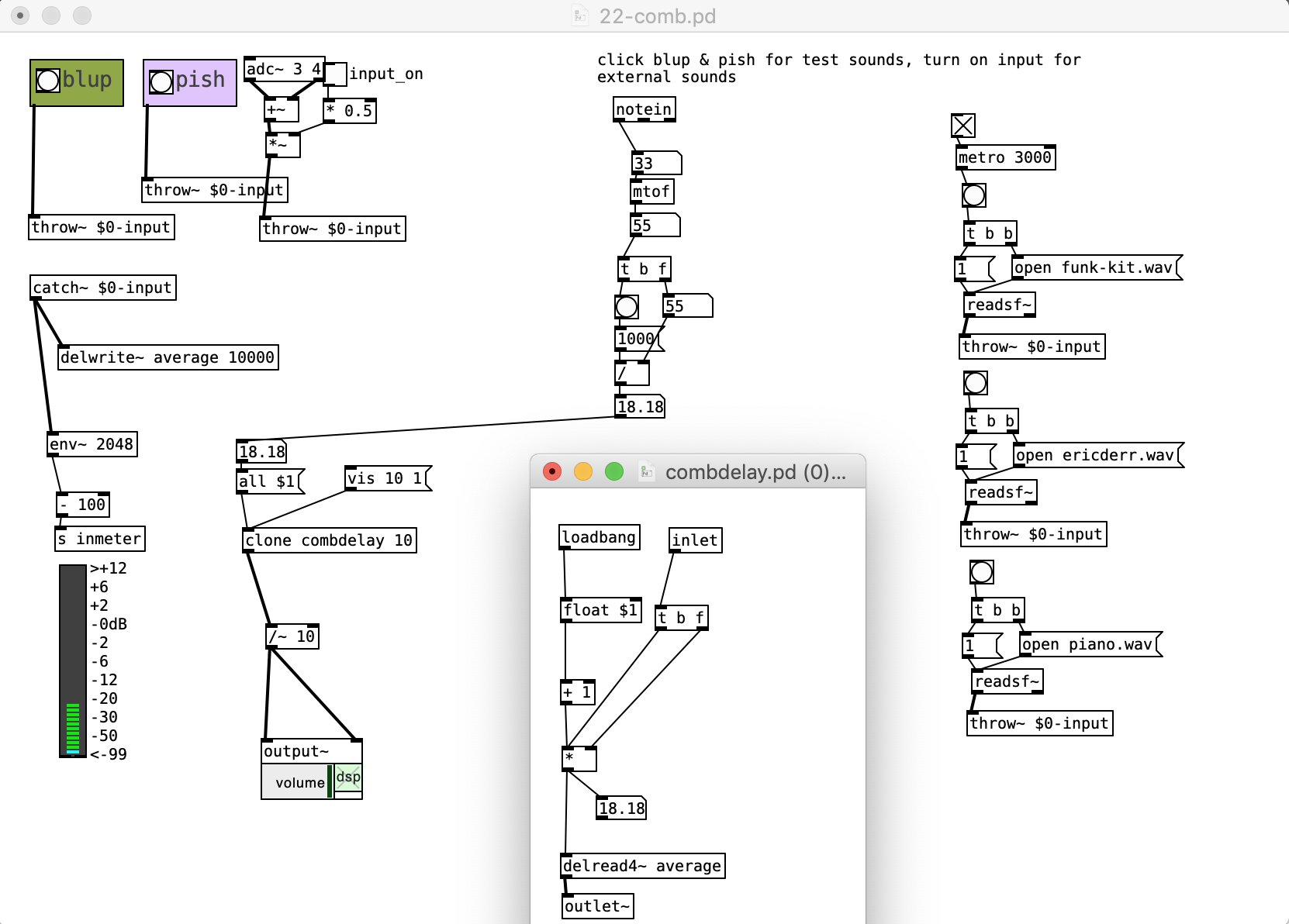

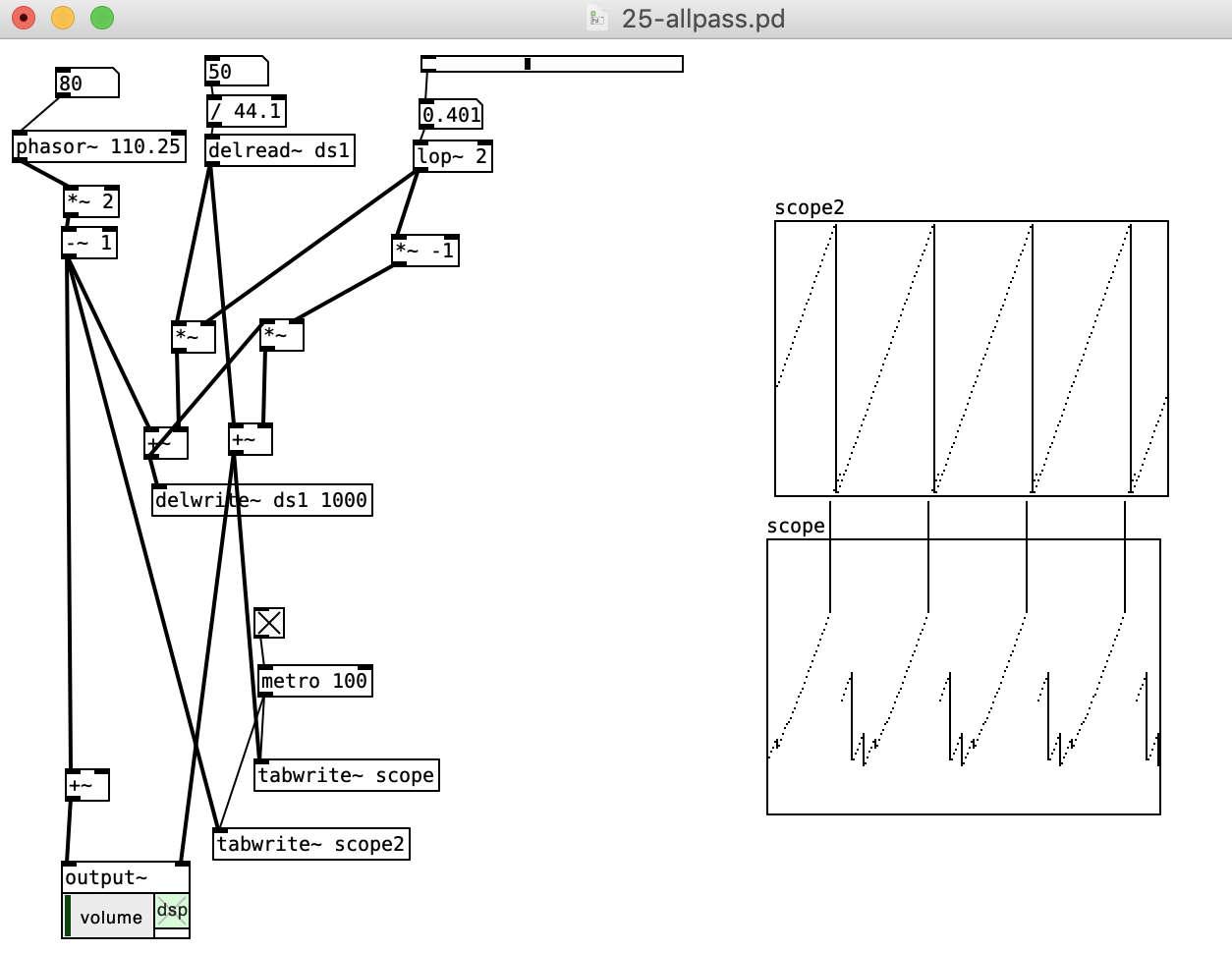

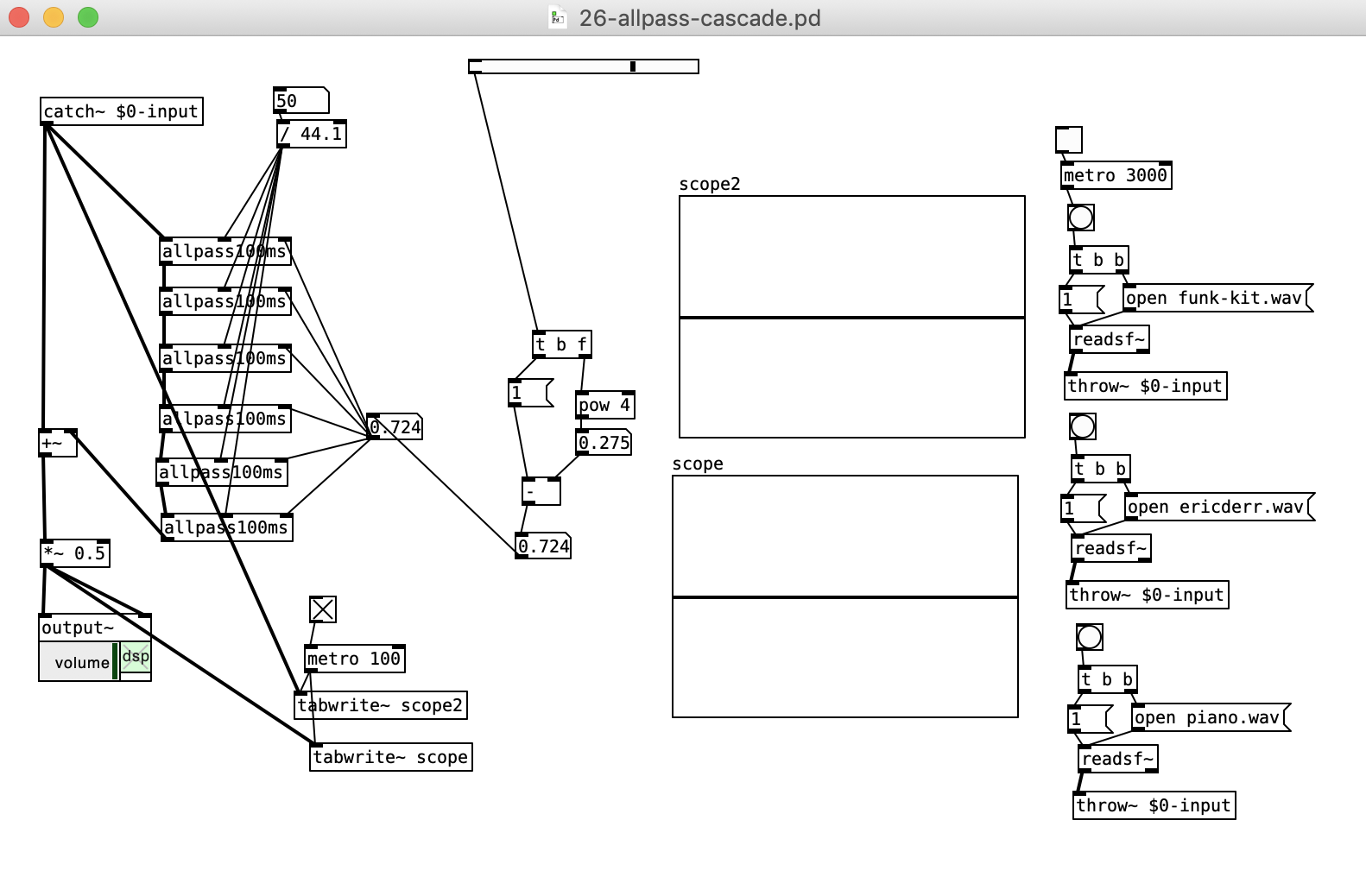

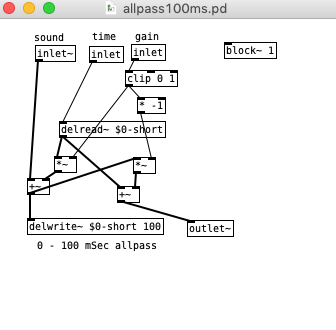

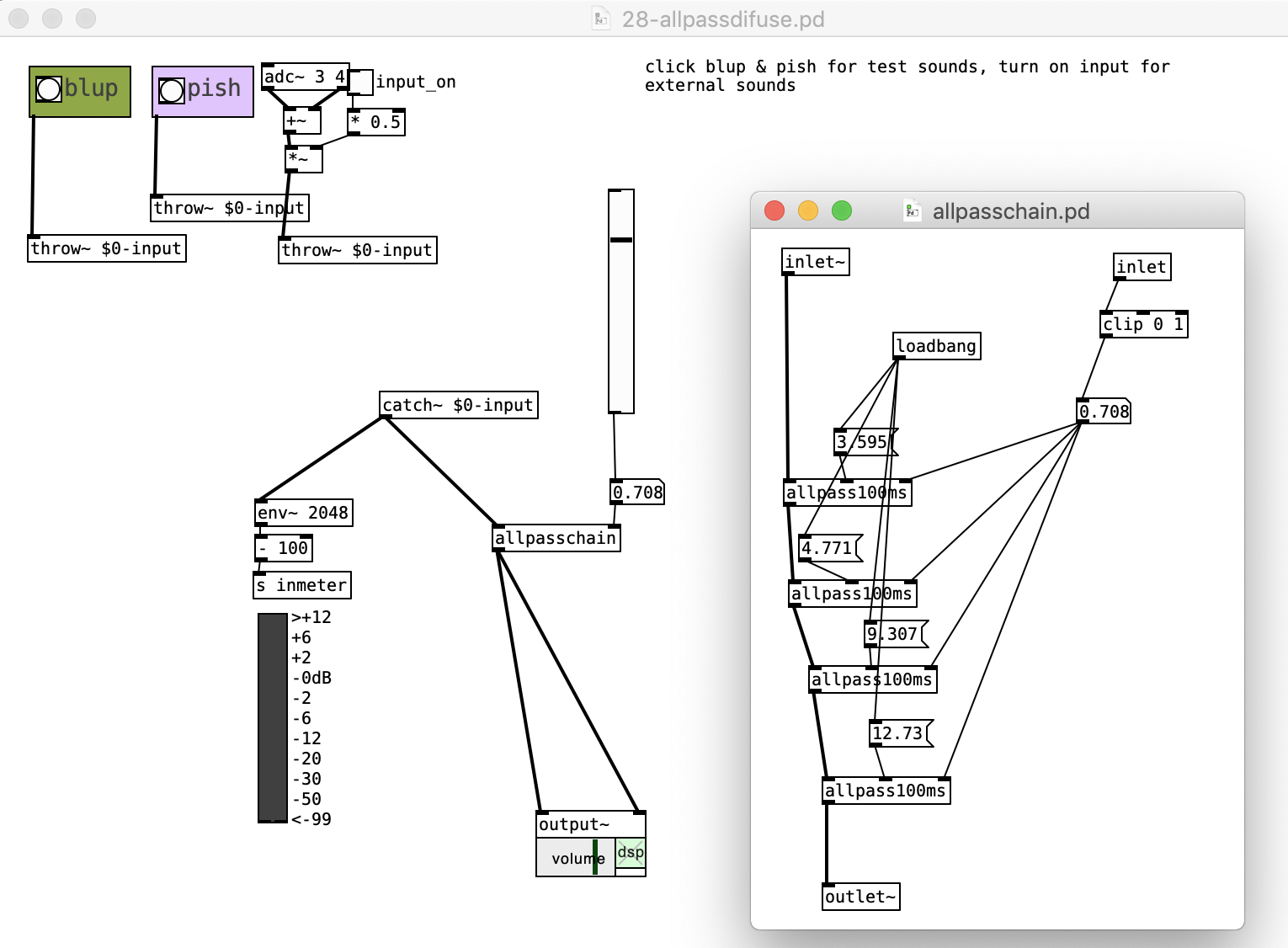

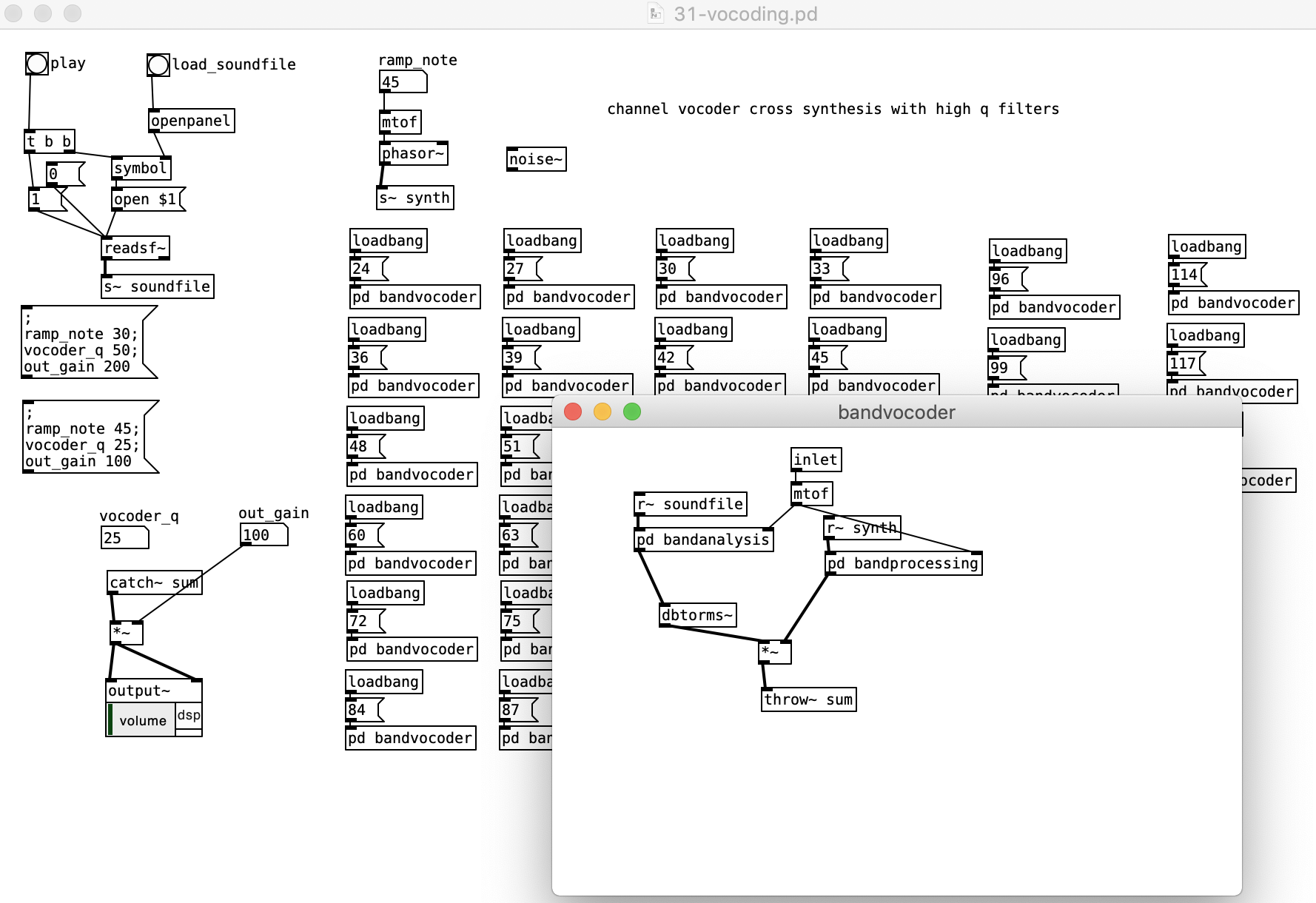

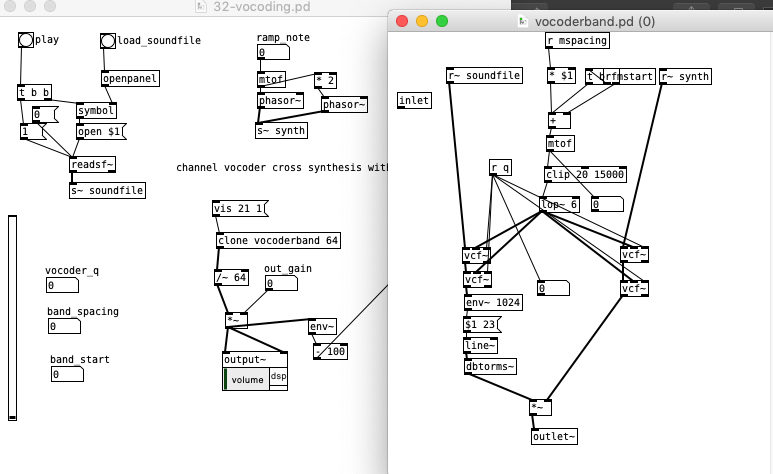

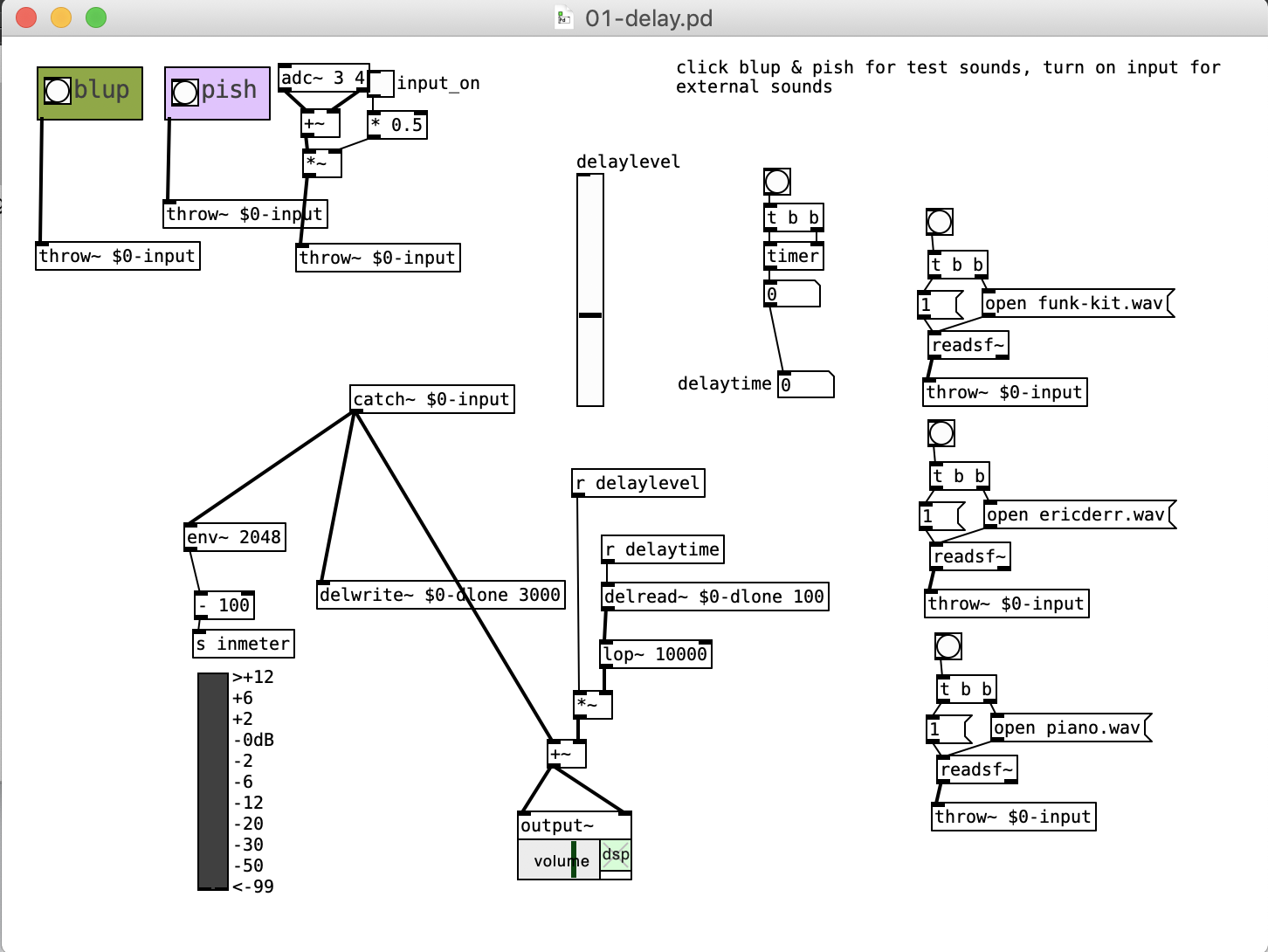

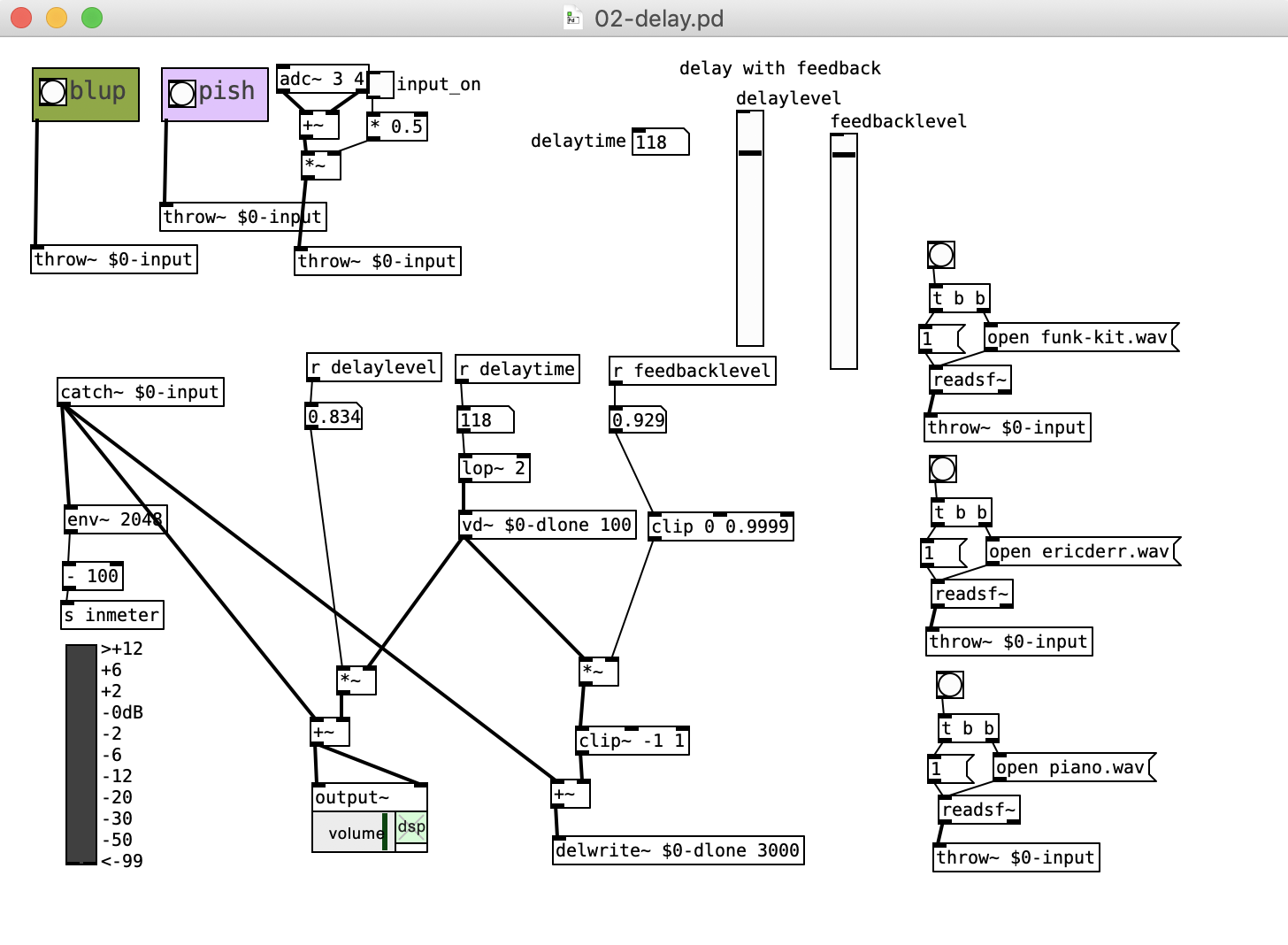

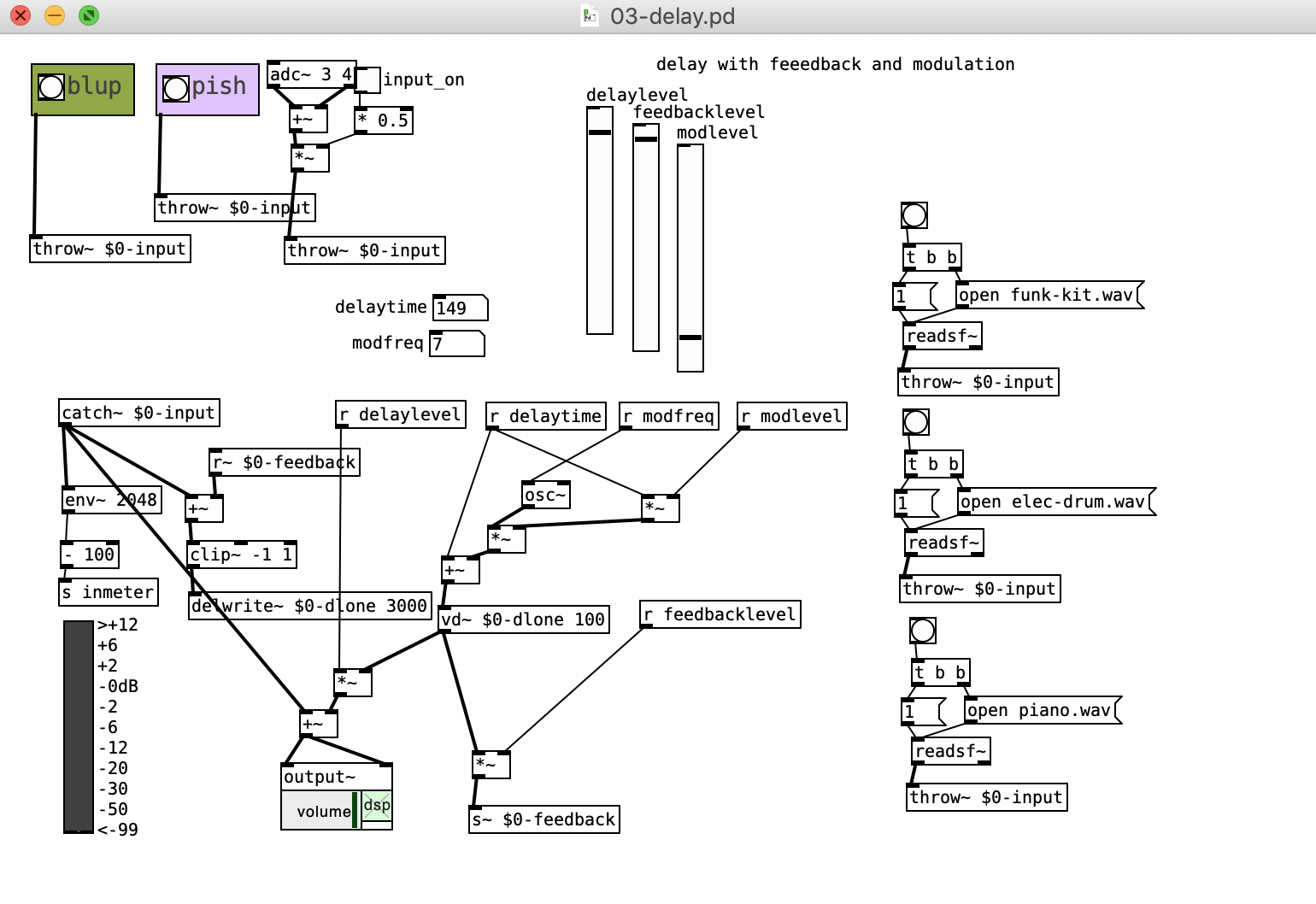

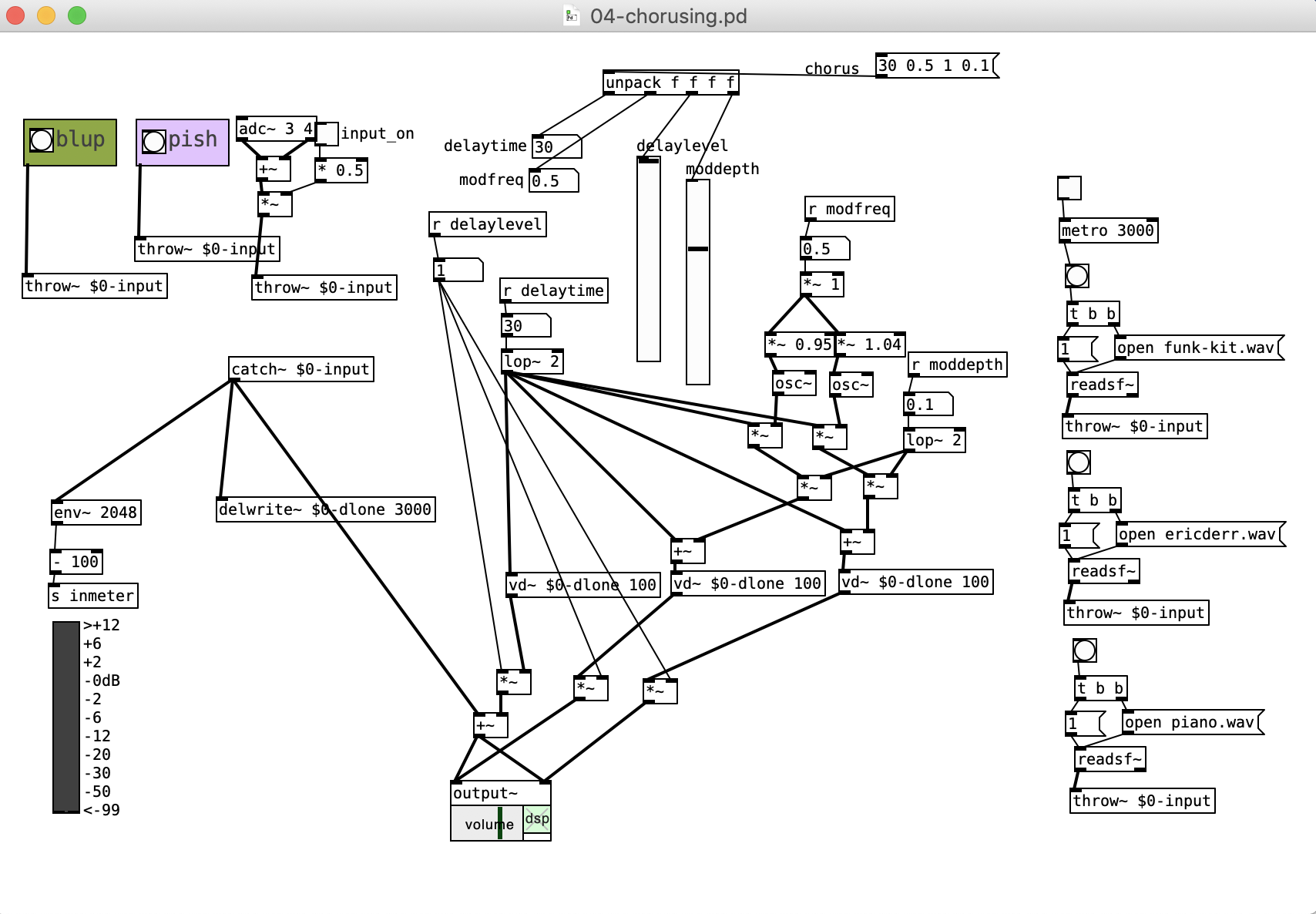

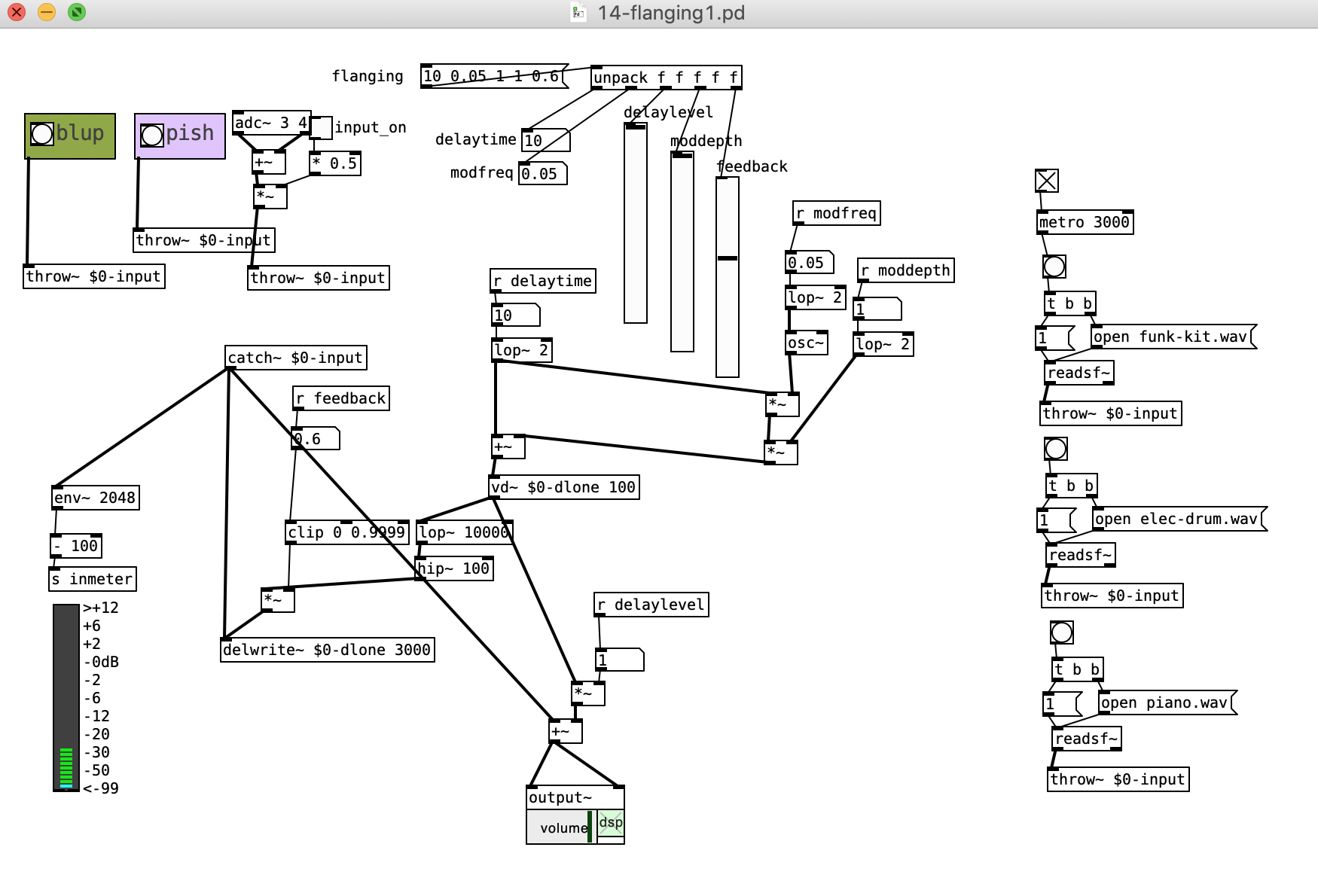

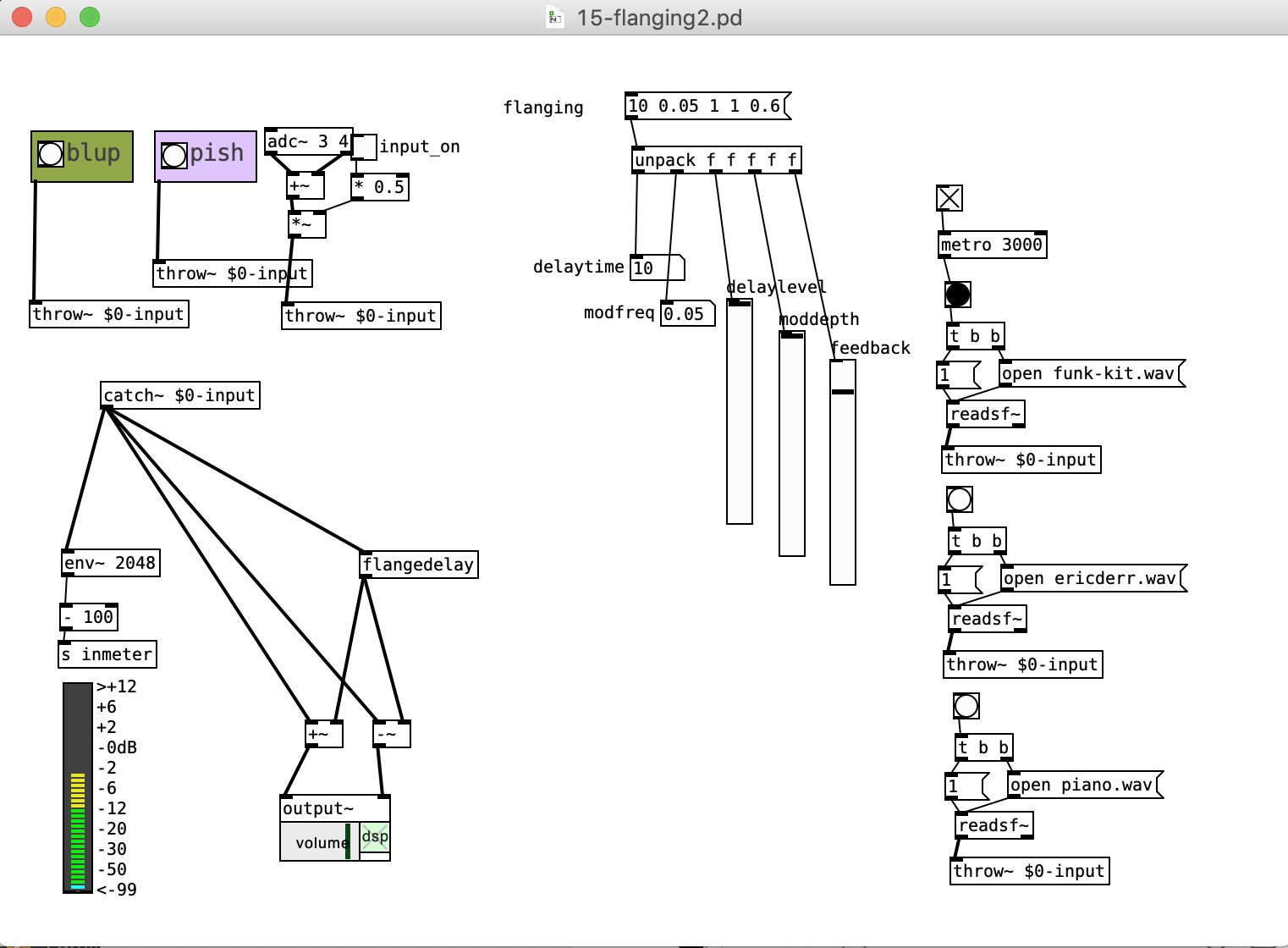

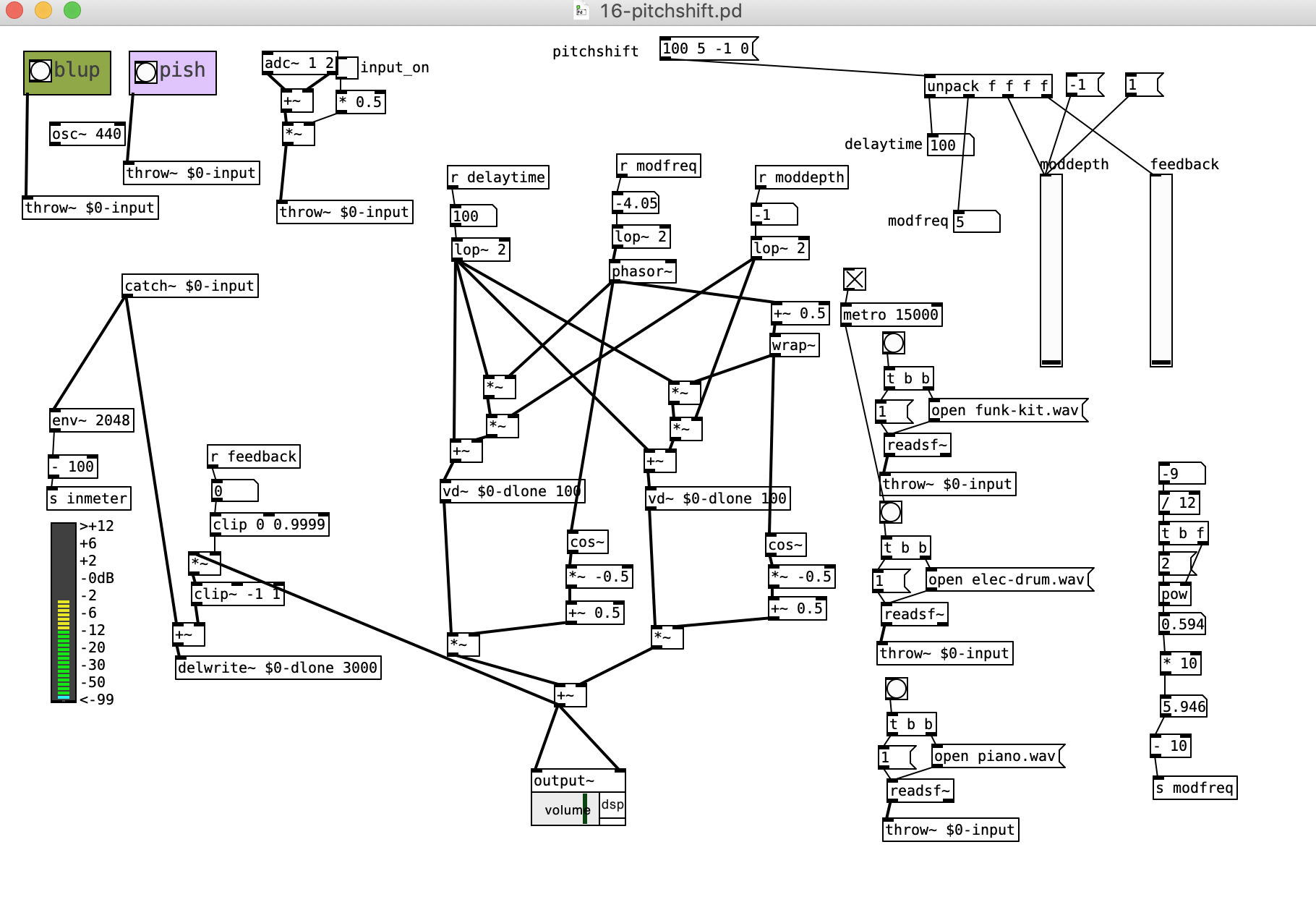

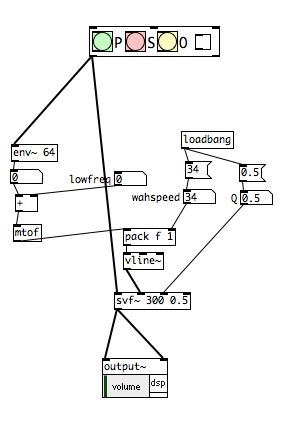

3) Three effects (e.g., reverb, delay, chorus, flanger, pitch shift, auto-pan, auto-wah, distortion), where each effect can process the polyphonic synth, the sample player, both instruments together, or neither instrument (effect disabled).

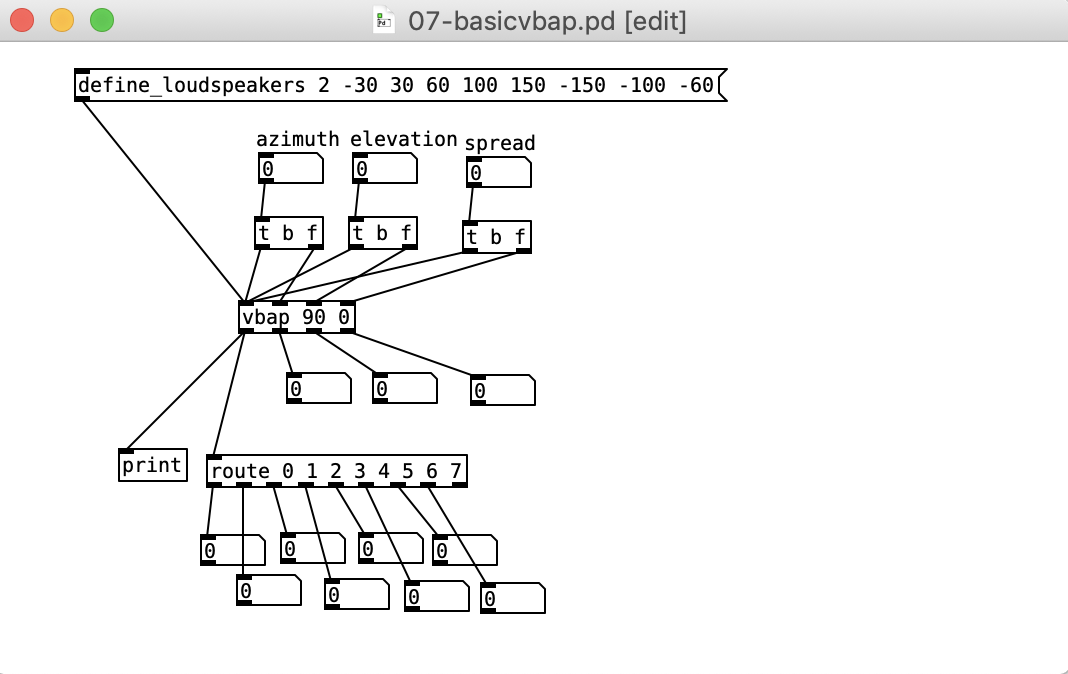
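The four routing states in item 3 can be thought of as a pair of send gains per effect: one for the synth and one for the sample player. The Python sketch below is for illustration only; in a Pd patch the same idea would typically be two [*~] objects feeding one effect input, with the gains set from a front-panel selector. The names `Route`, `ROUTE_GAINS` and `effect_input` are hypothetical.

```python
from enum import Enum


class Route(Enum):
    """Which instrument(s) feed one effect."""
    SYNTH = "synth"
    SAMPLER = "sampler"
    BOTH = "both"
    NONE = "none"  # effect disabled


# (synth_gain, sampler_gain) applied before the signal reaches the effect input
ROUTE_GAINS = {
    Route.SYNTH: (1.0, 0.0),
    Route.SAMPLER: (0.0, 1.0),
    Route.BOTH: (1.0, 1.0),
    Route.NONE: (0.0, 0.0),
}


def effect_input(synth_block, sampler_block, route):
    """Mix one block of synth and sampler samples into an effect's input."""
    g_synth, g_sampler = ROUTE_GAINS[route]
    return [g_synth * a + g_sampler * b for a, b in zip(synth_block, sampler_block)]
```

Each of the three effects would hold its own `Route` setting. One design caveat: a gain of 1.0 on both inputs in the "both" state can make the effect input louder than either instrument alone, so halving each gain is a common alternative.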

4) Use spatialization techniques to place items 1, 2, and 3 each in a separate space.

5) All elements of items 1-4 must be controlled from a front panel with well-designed, clearly labelled controls.

6) Either:

6a) Compose a piece using items 1-4 that lasts at least 3 minutes, has at least 3 sections, and can be played back with qlist

or

6b) Perform a piece using items 1-4 that lasts at least 3 minutes and has at least 3 sections.

7) Present in class either on Thursday of week 10 or during the final (Tuesday, 3-6 PM).

8) Extra credit: use more than two speakers for your spatialization.
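For items 4 and 8, one common spatialization technique is equal-power (constant-power) panning: gains of cos θ and sin θ keep the summed power constant as a source moves between two speakers, and applying the same law to each adjacent pair of speakers extends it to a ring of more than two. The Python sketch below computes per-speaker gains, assuming evenly spaced speakers arranged in a ring; the function names are hypothetical, and in Pd the resulting gains would typically be smoothed with [line~] and applied with [*~].

```python
import math


def equal_power_pan(pan):
    """Return (left, right) gains for pan in [0, 1]; 0 is hard left, 1 hard right."""
    theta = pan * math.pi / 2  # map 0..1 onto a quarter circle
    return math.cos(theta), math.sin(theta)


def ring_pan(azimuth, n_speakers):
    """Pairwise equal-power panning over a ring of evenly spaced speakers.

    azimuth is measured in speaker units: 0.0 is speaker 0, 1.0 is speaker 1,
    and values wrap around at n_speakers. Only the two adjacent speakers that
    bracket the azimuth receive signal.
    """
    if n_speakers < 2:
        raise ValueError("need at least two speakers")
    gains = [0.0] * n_speakers
    pos = azimuth % n_speakers
    base = int(pos)            # speaker at or just below the azimuth
    frac = pos - base          # position between that speaker and the next
    lo = base % n_speakers     # guards against pos rounding up to n_speakers
    hi = (lo + 1) % n_speakers
    gains[lo], gains[hi] = equal_power_pan(frac)
    return gains
```

For example, `ring_pan(1.5, 4)` places a source halfway between speakers 1 and 2, giving each a gain of about 0.707 and the other two speakers zero. Sweeping `azimuth` over time (for instance from an LFO or a qlist cue) moves a source around the room.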

Sound quality, synthesis quality, and originality all count.

Must be handed in before our final (3 PM, Tuesday, June 11th).