Music 172 - Computer Music II - Spring 2021

Zoom class: Tu/Th 2:00-3:20, lab: Th 3:30-4:20

Office hours: Tu/Th 3:30-5:00, https://ucsd.zoom.us/my/tomerbe

Email: tre@ucsd.edu

TAs

Topic, Teaching Schedule and Class Notes

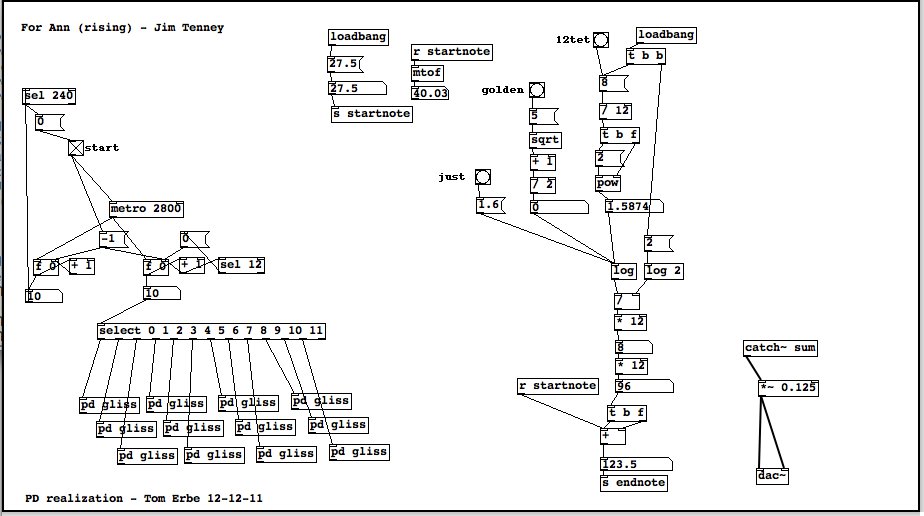

In Music 172 we will look at the application of computer music theory in electronic musical instruments and audio effects. We will cover a wide range of topics, and you will be expected to go further in your own work through the assignments. For each topic, we will look at the signal processing theory behind the technique and discuss how to implement it in a way that is musical and usable. Almost all of the topics will be demonstrated in class using Pure Data. Building the Pd patch yourself while it is being discussed will help you understand the topic.

The objective of this course is to gain familiarity with the implementation of common computer music techniques and the theory behind them. You will also learn Pure Data, a tool that can be used for prototyping musical instruments, game sound and sound effects. It is also useful for audio analysis and for research into synthesis and audio processing methods.

The topics covered during the course will likely include:

- drum machines: sampling, looping, granular techniques, modal synthesis

- drum machines: EQ, amplifiers, distortion

- drum machines: sequencing, beat clock, MIDI, controllers

- analog synthesis: oscillators, waveshaping, harmonics

- analog synthesis: modulation, wave-folding

- analog synthesis: filters, polyphony

- effects: delay, chorus, flange, phase shifting

- effects: vocoding, harmonizers

- effects: reverb, spatialization, VBAP, ambisonics

Class notes will be developed as we reach each topic and posted in the Modules section of Canvas.

Grading

- There will be 4 assignments and a final project. Your projects should generally take the form of a piece of music or a software/equipment design. Either way, you will need to demonstrate the techniques the assignment asks for. Quality, design, musicality and originality all count.

- All of your work should be original. If you study with your classmates, you must each complete your own original patch; collaboration on the assignments themselves is not allowed. Late assignments will be accepted but will lose 1 point for each week they are late (each assignment is worth 15 points). Late assignments can be handed in up to the end of week 10. If you fall behind, please ask for help! Assignments should be submitted via Canvas as a ZIP file that includes all patches, abstractions, externals and sound files.

- Your participation score is based on the number of on-topic questions you ask during class (questions in the chat count). If you are attending asynchronously, you may submit your questions through Canvas. Ten questions are required over the quarter.

- If you would rather use Max/MSP, you may use it instead of Pure Data for your assignments.

Percentage breakdown:

60% – 4 assignments (15 points each)

30% – final project

10% – attendance and participation

Text and Required Materials

The Theory and Technique of Electronic Music – Miller Puckette (required & online: http://msp.ucsd.edu/techniques.htm)

Designing Sound – Andy Farnell (recommended: http://aspress.co.uk/ds/about_book.html)

Pure Data (required software: http://msp.ucsd.edu/software.html)

Abstractions to download (this list will grow over the quarter)