I have been looking at James Tenney’s documentation for Analog #1 – Noise Study in “Computer Music Experiences 1961-1964.” I am hoping to recreate the instrument that Tenney used in this piece. As it was completed in 1961, the software he used was probably closer to Music III than it was to Music IV, though it seems he describes the instrument in Music IV terms.

In the “CME” document Tenney shows a unit generator (“U5”) called RANDI with two inlets, the left controlling amplitude and the right controlling frequency or period. RANDI is an interpolating random number generator that generates numbers at an audio rate. This is probably the same unit generator that is called RAND in Max Mathews’ article “An Acoustic Compiler for Music and Psychological Stimuli”. In Tenney’s 1963 article “Sound-Generation by means of a Digital Computer” he describes a RAND and a RANDX: RAND is the interpolating random generator, and RANDX holds each random value until the next one is generated. In the “Music IV Programmer’s Manual”, the two functions are RANDI and RANDH (interpolate and hold).
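To make the hold/interpolate distinction concrete, here is a minimal sketch. It assumes a fixed whole number of samples between new values and uses a simple linear congruential generator as a stand-in noise source, since the random number method of Music III/IV is not documented here. `rand_fill`, `next_random` and `lcg_state` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

/* Stand-in noise source (an assumption): a 32-bit LCG, not Music III/IV's generator. */
static uint32_t lcg_state = 12345u;

static float next_random(void)
{
    lcg_state = lcg_state * 1664525u + 1013904223u;
    /* top 24 bits mapped to [-1, 1) */
    return (float)(lcg_state >> 8) / 8388608.0f - 1.0f;
}

/* Fill out[0..n) with noise that draws a new value every `period` samples (period >= 1).
   hold != 0 steps like RANDH/RANDX; hold == 0 ramps linearly like RANDI/RAND. */
static void rand_fill(float *out, size_t n, unsigned period, int hold)
{
    float previous = 0.0f;         /* value being left */
    float current = next_random(); /* value being approached (or held) */
    unsigned count = 0;            /* samples elapsed in this segment */

    for (size_t i = 0; i < n; i++) {
        if (hold)
            out[i] = current;
        else
            out[i] = previous + (current - previous) * (float)count / (float)period;

        if (++count >= period) {   /* segment finished: draw the next value */
            count = 0;
            previous = current;
            current = next_random();
        }
    }
}
```

With the same seed, the interpolating version reaches each held value exactly at the start of the following segment, so it traces straight lines between the RANDH steps.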

There are two parameters for all of these unit generators. The left inlet is the limit of the random number generation, or amplitude. The right inlet is described as a bandwidth control in the Music III document, where bandwidth = (sample rate / 2) × (right inlet / 512). If this inlet varies from 0 to 512, the bandwidth varies from 0 to the Nyquist frequency. The Music IV manual describes it instead as a control of the rate of random number generation, where a new random number is generated every 512/(right inlet) samples. This is pretty consistent throughout all of the documents, with one exception: in Tenney’s 1963 article (figure 13) he implies that the right inlet I controls period (period is 512/I).
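The two descriptions of the right inlet can be checked against each other with a little arithmetic. The sketch below (function names are hypothetical) encodes both formulas: at a 10,000 Hz sample rate, a right-inlet value of 64 gives a new value every 8 samples, 1250 new values per second, and a bandwidth of 625 Hz, while 512 gives a new value every sample and a bandwidth of 5000 Hz, the Nyquist frequency.

```c
/* Rate of new random values per second: one every 512 / i2 samples (Music IV manual). */
static double randi_rate_hz(double sr, double i2)
{
    return sr * i2 / 512.0;
}

/* Samples between new values; IEEE infinity when i2 == 0 (the value never changes). */
static double randi_period_samples(double i2)
{
    return 512.0 / i2;
}

/* Bandwidth per the Music III description: (sr / 2) * (i2 / 512). */
static double randi_bandwidth_hz(double sr, double i2)
{
    return sr / 2.0 * (i2 / 512.0);
}
```

Written this way, the Music III bandwidth is always half the Music IV rate of new values, so the two documents describe the same control.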

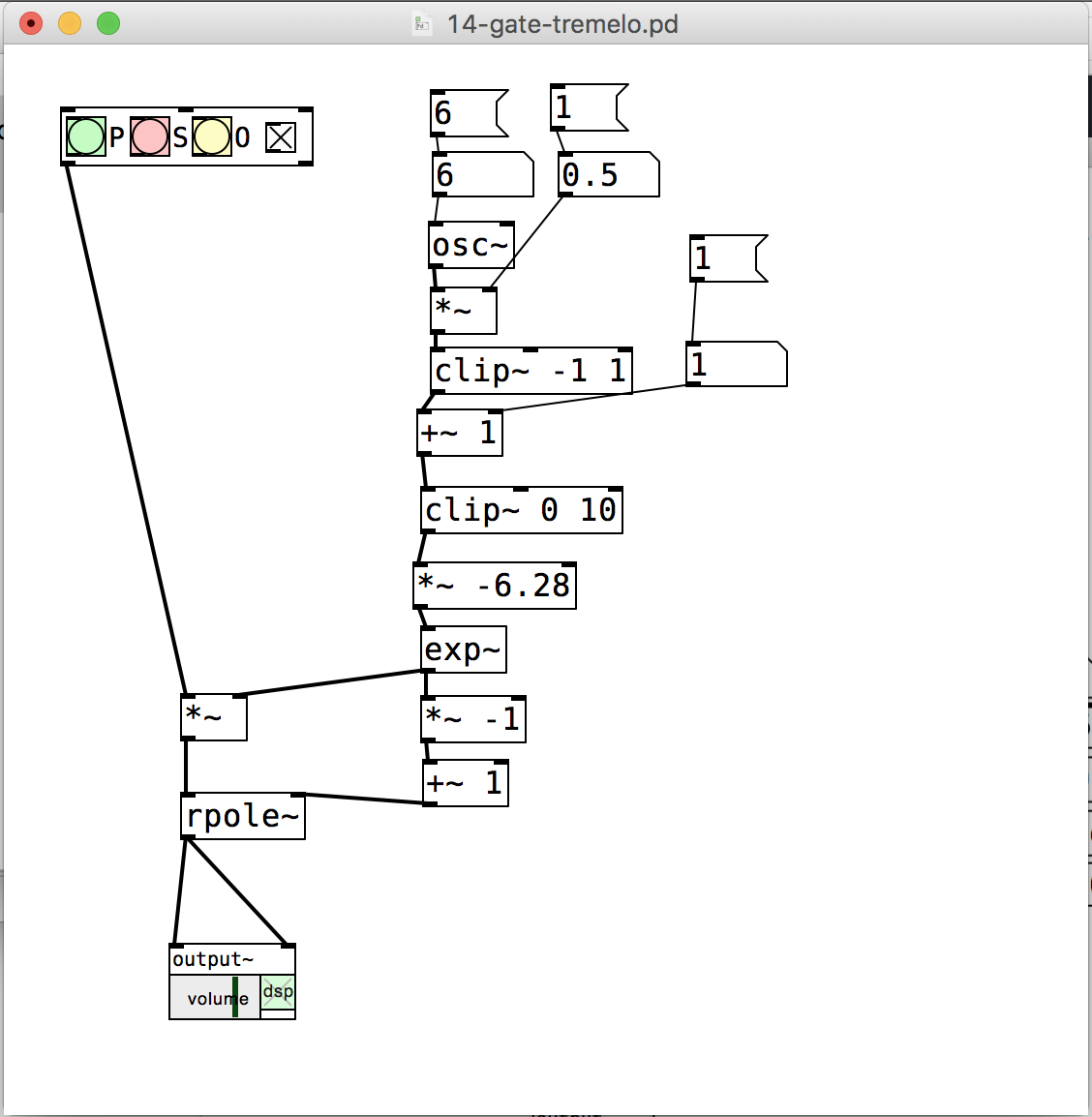

So to emulate RAND/RANDI, I need two inlets: one that determines amplitude (I1), and one that determines frequency (I2), where frequency is on a linear scale from 0 to 512 and 512 corresponds to a new random number generated every sample. Looking at James Tenney’s various example patches, with continuous envelopes sent into the inlets, it seems likely that floating point numbers were used for both of these inlets.
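A direct emulation of that two-inlet interface can take I1 and I2 as per-sample arrays, so both can be driven by envelopes. This is a sketch under assumptions: the state struct, `randi_run` and `randi_uniform` are hypothetical, I2 is assumed non-negative, and the LCG again stands in for the unknown original generator. The phase advances by I2/512 per sample, so it wraps once every 512/I2 samples.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical state for a RANDI emulation driven directly by I1 and I2. */
typedef struct {
    double phase;     /* 0..1 position between previous and current */
    double previous;  /* value being left */
    double current;   /* value being approached */
    uint32_t seed;    /* stand-in LCG state (assumption) */
} randi_state;

/* Uniform value in [-1, 1) from the stand-in LCG. */
static double randi_uniform(randi_state *s)
{
    s->seed = s->seed * 1664525u + 1013904223u;
    return (double)(s->seed >> 8) / 8388608.0 - 1.0;
}

/* i1[k]: amplitude; i2[k]: rate on the 0..512 scale (assumed >= 0). */
static void randi_run(randi_state *s, const float *i1, const float *i2,
                      float *out, size_t n)
{
    for (size_t k = 0; k < n; k++) {
        s->phase += i2[k] / 512.0;      /* one wrap every 512 / i2 samples */
        while (s->phase >= 1.0) {       /* wrapped: move on to a new value */
            s->phase -= 1.0;
            s->previous = s->current;
            s->current = randi_uniform(s);
        }
        double v = s->previous + s->phase * (s->current - s->previous);
        out[k] = (float)(i1[k] * v);    /* scale to +/- I1 */
    }
}
```

Starting from a zeroed state with I2 = 64, the output is silent for the first 8 samples and then ramps toward the first random value, reaching it 8 samples later.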

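The code below generates a new random value every 512/i2 samples and interpolates linearly from the previous value to the new one. This is what is implied by the Music III and IV documentation, but I can’t be sure of the method of random number generation. In this Pd version the signal inlet takes the rate as a frequency in Hz (falling back to the float x_f when the signal is zero), so i2 corresponds to a frequency of i2 × sample rate / 512. The output runs from -1 to 1, so the amplitude i1 has to be applied after the object, for instance with a [*~]. Also, like the originals, the 0 to 512 scale is sample rate dependent: it will only act like the original code if run at a sample rate of 10,000 Hz.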

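```c
#include <stdlib.h>  /* random() */
#include "m_pd.h"    /* Pd external API: t_int, t_float, t_object */

/* Object state, reconstructed from the fields randi_perform uses. */
typedef struct _randi
{
    t_object x_obj;
    t_float x_f;         /* float fallback when the signal inlet is zero */
    t_float samplerate;  /* sample rate in Hz */
    t_float phase;       /* 0..1 position between previous and current */
    t_float previous;    /* random value being left */
    t_float current;     /* random value being approached */
} t_randi;

/* New random value in [-1, 1]: random() returns 0..2^31-1, and 2^30 = 1073741824. */
static t_float randi_newvalue(void)
{
    return (t_float)random() / 1073741824.0f - 1.0f;
}

static t_int *randi_perform(t_int *w)
{
    t_randi *x = (t_randi *)(w[1]);
    t_float *freq = (t_float *)(w[2]);
    t_float *out = (t_float *)(w[3]);
    int n = (int)(w[4]);
    int sample = 0;
    t_float phaseincrement;

    while (n--)
    {
        // first we need to calculate the phase increment from the frequency
        // and sample rate - this is the number of cycles per sample
        // freq = cyc/sec, sr = samp/sec, phaseinc = cyc/samp = freq/sr
        if (freq[sample] != 0.0f)
            phaseincrement = freq[sample] / x->samplerate;
        else
            phaseincrement = x->x_f / x->samplerate;

        // now, increment the phase and keep it within [0, 1),
        // drawing a new random value each time it wraps
        x->phase += phaseincrement;
        while (x->phase >= 1.0f)
        {
            x->phase -= 1.0f;
            x->previous = x->current;
            x->current = randi_newvalue();
        }
        // negative frequencies run backwards: the old "previous" becomes the
        // target and the new value is the one being left, so there is no jump
        while (x->phase < 0.0f)
        {
            x->phase += 1.0f;
            x->current = x->previous;
            x->previous = randi_newvalue();
        }

        // linear interpolation between the previous and current values
        out[sample] = x->previous + x->phase * (x->current - x->previous);
        sample++;
    }

    return (w + 5);
}
```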
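Because the perform routine expects Hz rather than Tenney’s 0 to 512 value, a small conversion is needed in front of it. The sketch below (`i2_to_hz` is a hypothetical name) applies the 512/i2-samples rule. One possible design choice: passing 10000 as `sr`, rather than Pd’s running sample rate, keeps the number of new values per second the same as in the original at any Pd sample rate, although the interpolation between values is then computed at the higher rate.

```c
/* Convert Tenney's 0..512 rate value to Hz:
   one new value every 512 / i2 samples  =>  sr * i2 / 512 new values per second. */
static float i2_to_hz(float i2, float sr)
{
    return i2 * sr / 512.0f;
}
```

For example, `i2_to_hz(64.0f, 10000.0f)` gives 1250 Hz, a new value every 8 samples at the original 10,000 Hz rate.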

My next step is to build this into a PD external and test it in a patch similar to the one used in Tenney’s “Noise Study”.

Update – 11/12/14

I have implemented the noise generator above and inserted it into a PD patch (I have edited the real code into this article). The results sound very much like Noise Study. Here is a single voice:

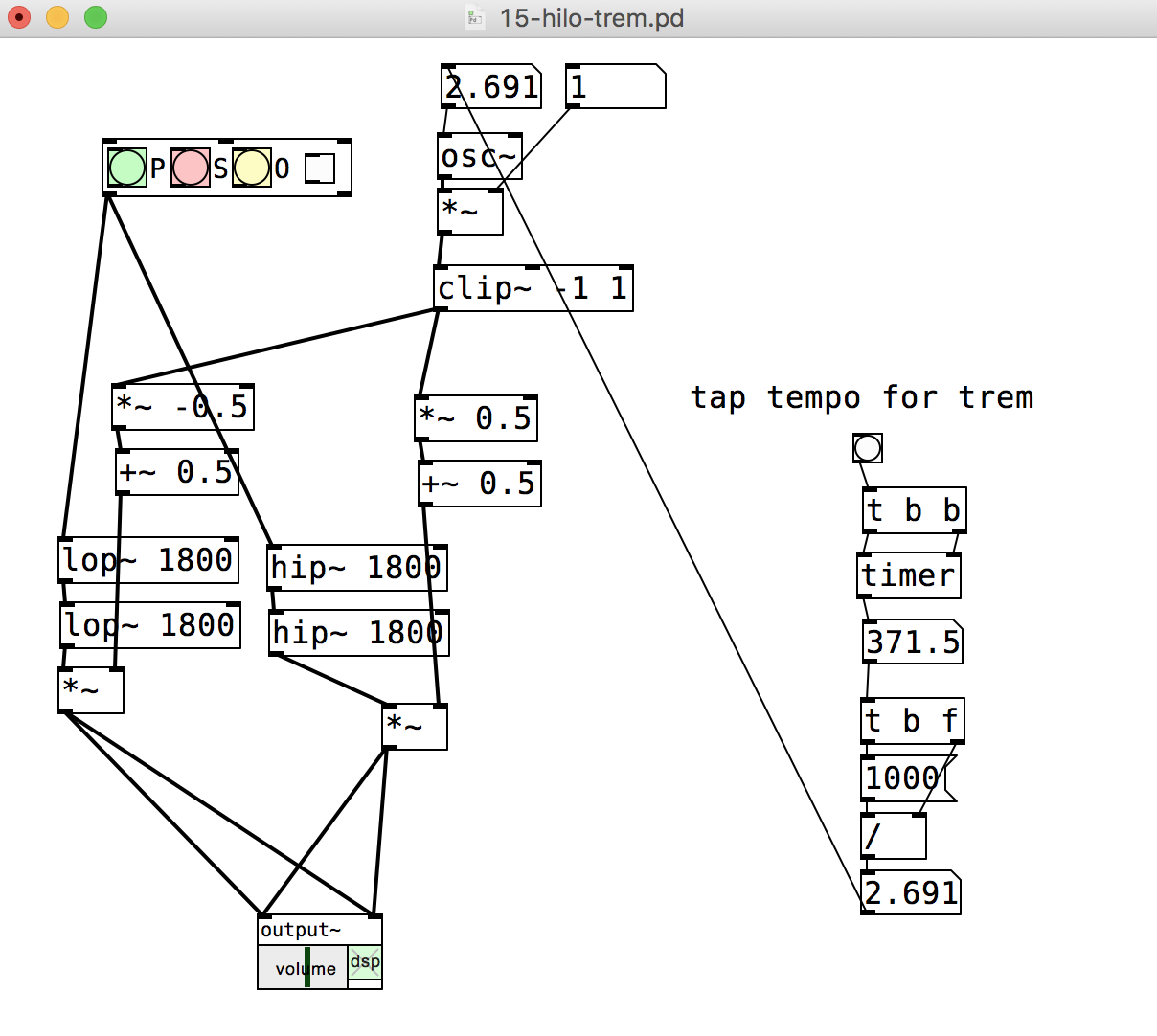

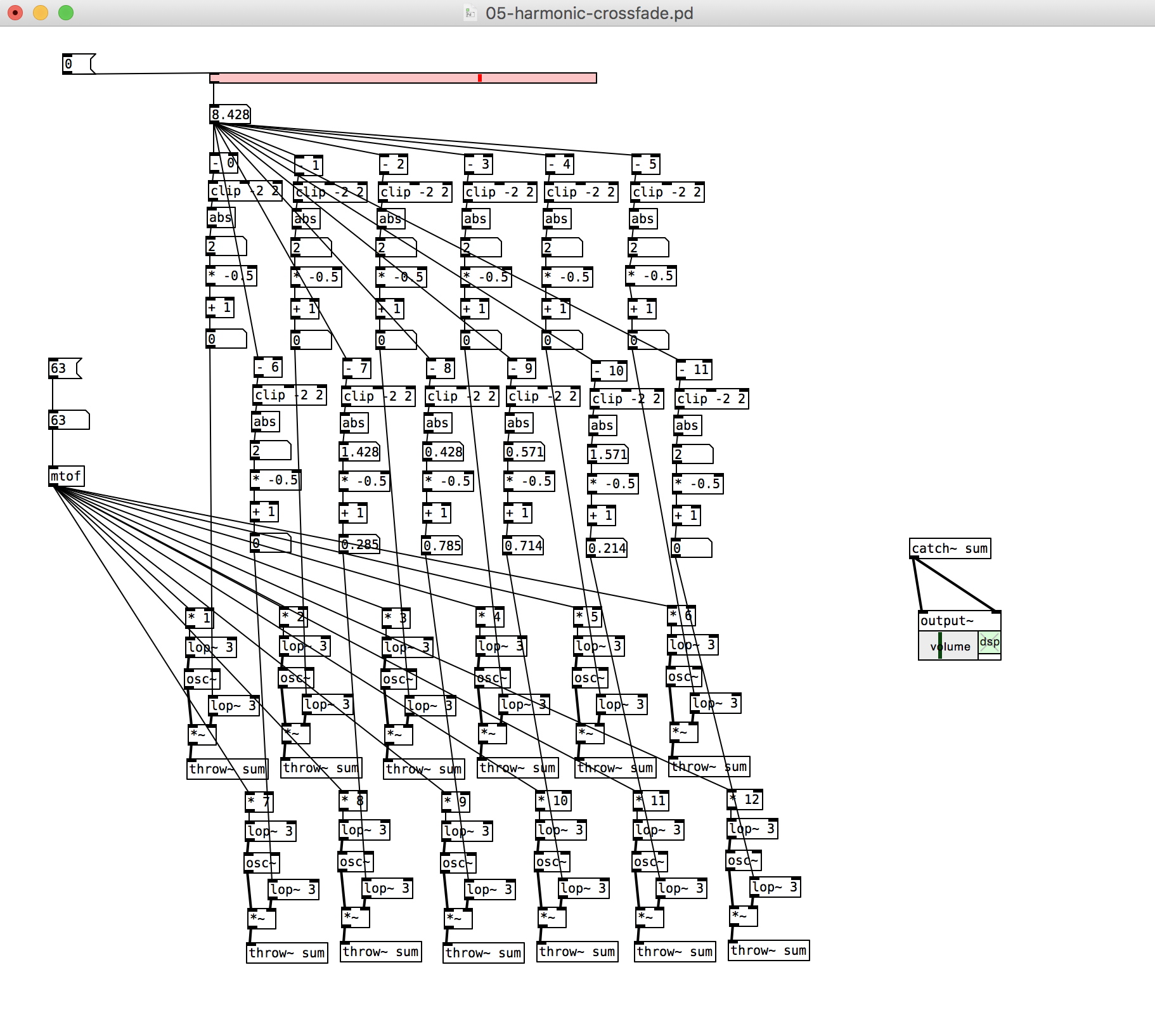

Now to determine the overall structure.